Jiacheng Xie

I'm a Ph.D. student and research assistant at Digital Biology, University of Missouri.

Research

I'm interested in medical image analysis, clinical decision support systems, and the application of artificial intelligence in medicine and healthcare. My recent work also explores epidemiological insights derived from social media data. Much of my research focuses on building AI systems that assist with clinical diagnosis, treatment planning, and public health surveillance. Selected projects and publications are highlighted below.

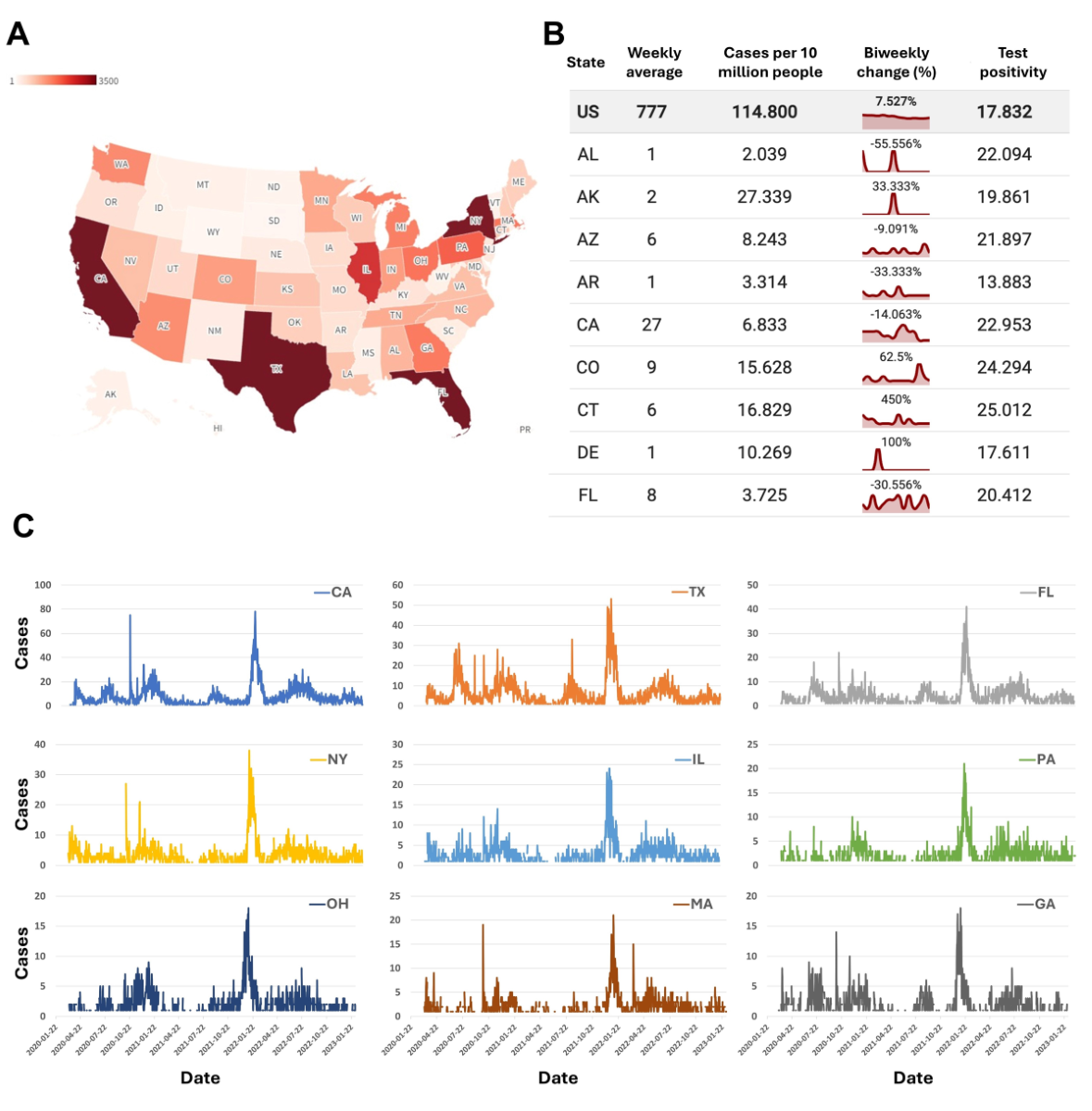

Leveraging Large Language Models for Infectious Disease Surveillance—Using a Web Service for Monitoring COVID-19 Patterns From Self-Reporting Tweets: Content Analysis

Journal of Medical Internet Research, 2025

In this work, we developed a real-time COVID-19 surveillance system based on self-reported cases on Twitter, using large language models to automatically detect reported infections, symptoms, recoveries, and reinfections. The system predicted national case trends an average of 7.6 days earlier than official reports and uncovered rare symptoms not listed by the CDC. We made these findings available through Covlab, an interactive platform that supports public health decision-making and early outbreak detection.

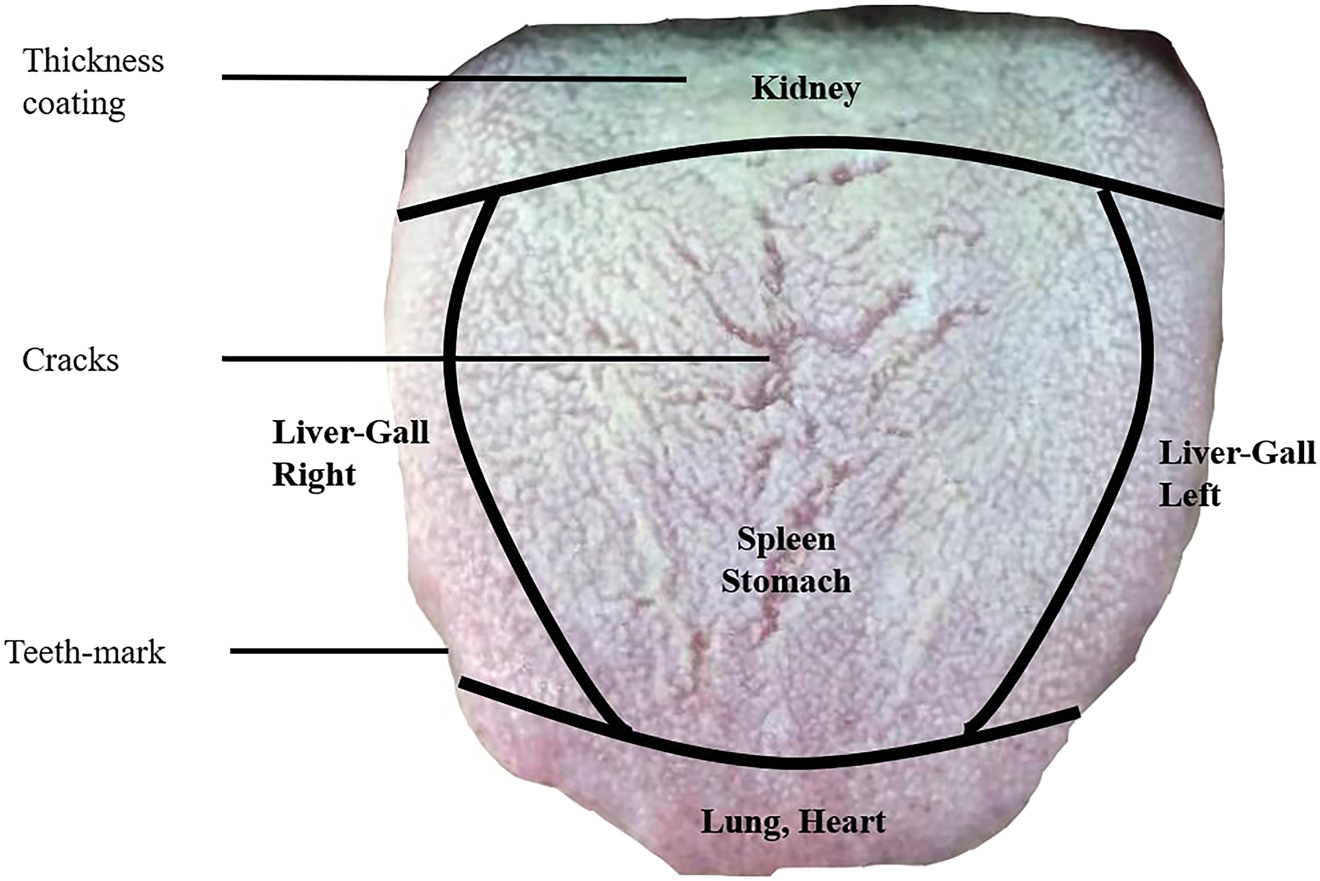

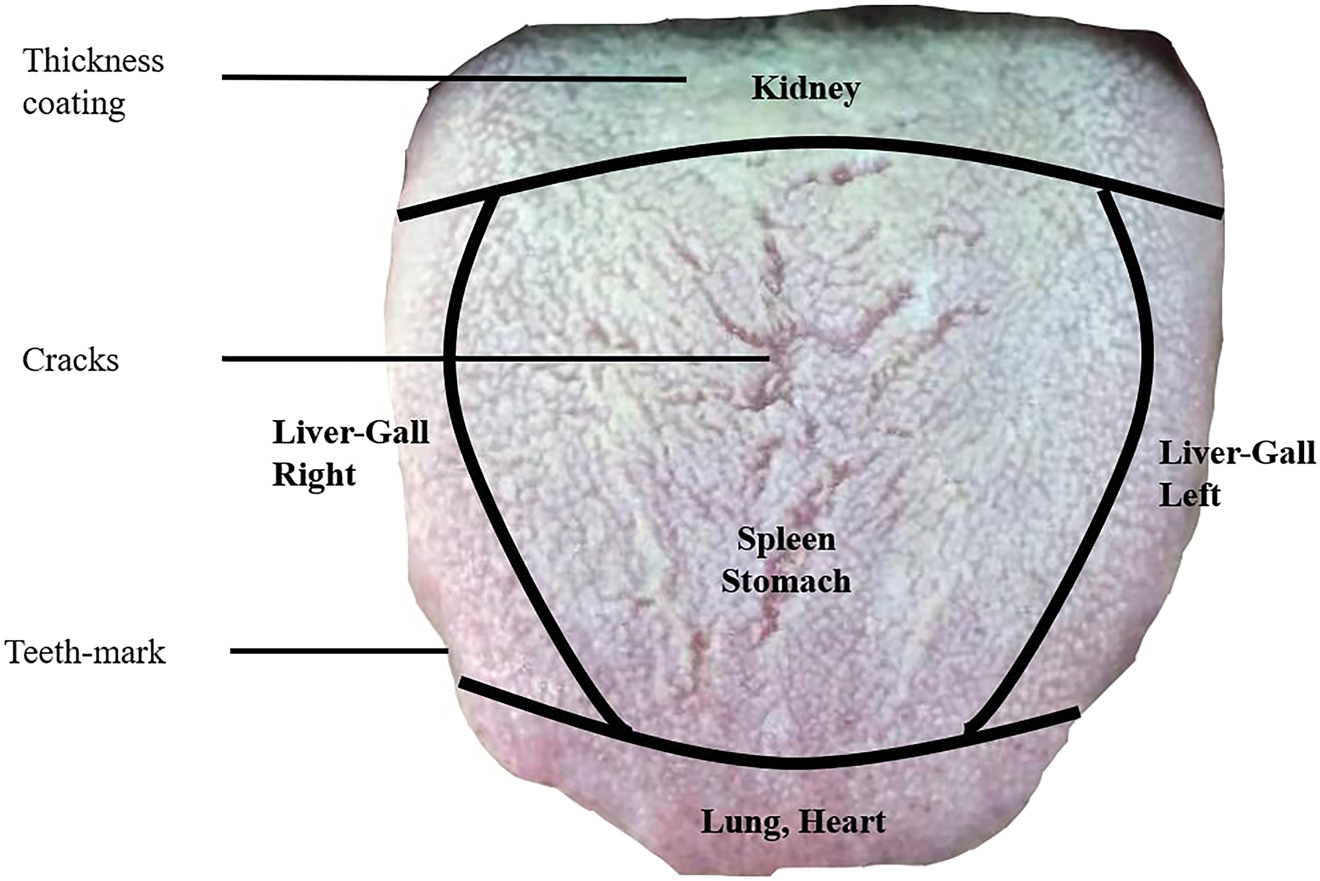

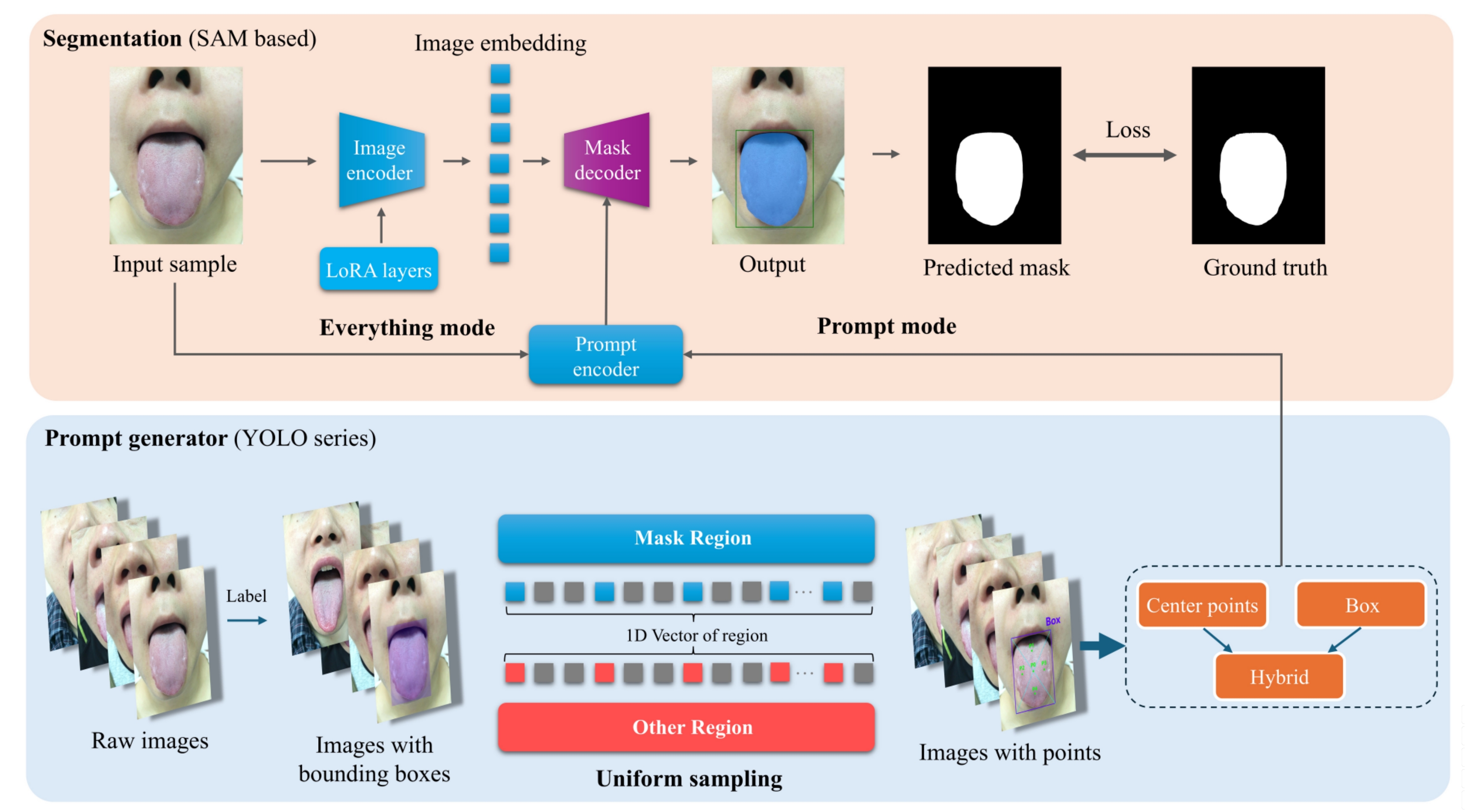

Digital tongue image analyses for health assessment

Medical Review, 2021

In this work, we reviewed recent advances in computerized tongue diagnosis for health assessment, covering key components such as tongue image acquisition devices, tongue region segmentation, feature extraction, and color correction. We compared traditional machine learning and deep learning approaches to tongue-based constitution classification and discussed the strengths and limitations of each. Finally, we highlighted current challenges and outlined future directions for the standardization and intelligent application of digital tongue diagnosis systems.

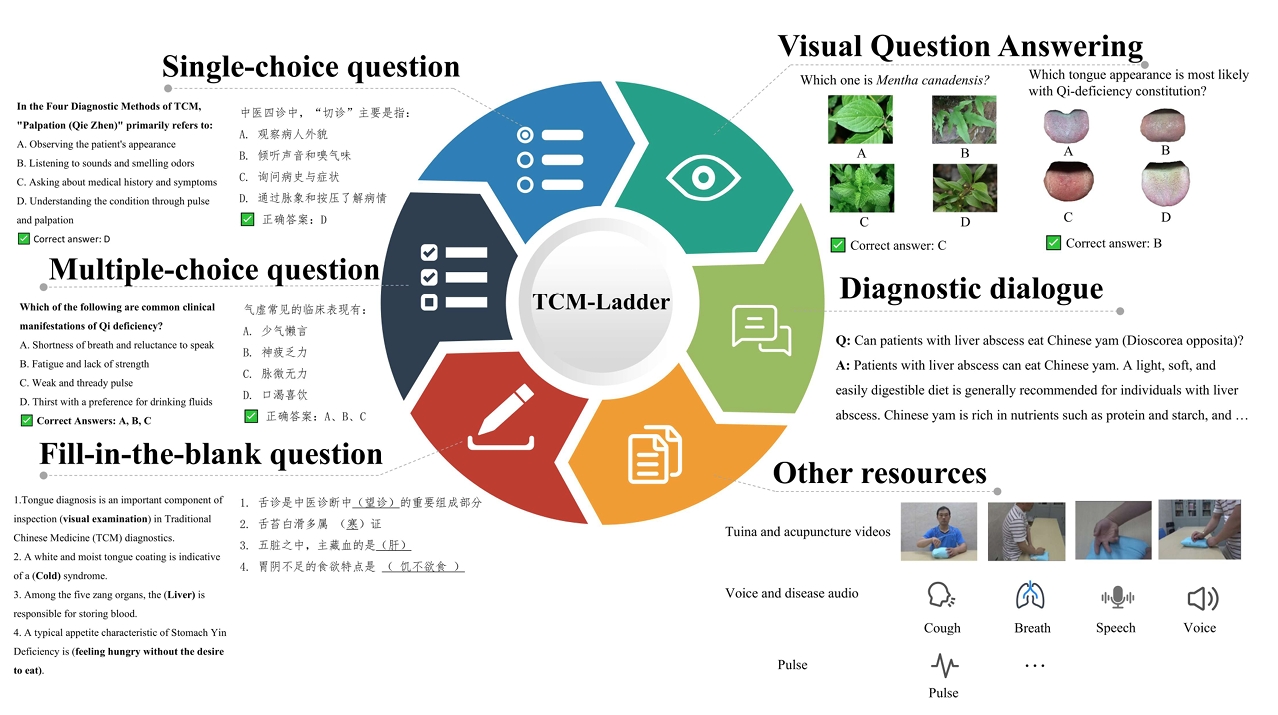

TCM-Ladder: A Benchmark for Multimodal Question Answering on Traditional Chinese Medicine

Under Review, 2025

In this paper, we introduce TCM-Ladder, the first unified multimodal question-answering benchmark for Traditional Chinese Medicine (TCM), consisting of over 52,000 questions in text, image, and video formats. We evaluate both general-purpose and TCM-specific large language models (LLMs) and propose a new metric, Ladder-Score, which assesses responses on terminology accuracy and semantic alignment. The benchmark provides a standardized platform for advancing the development of multimodal LLMs for TCM.

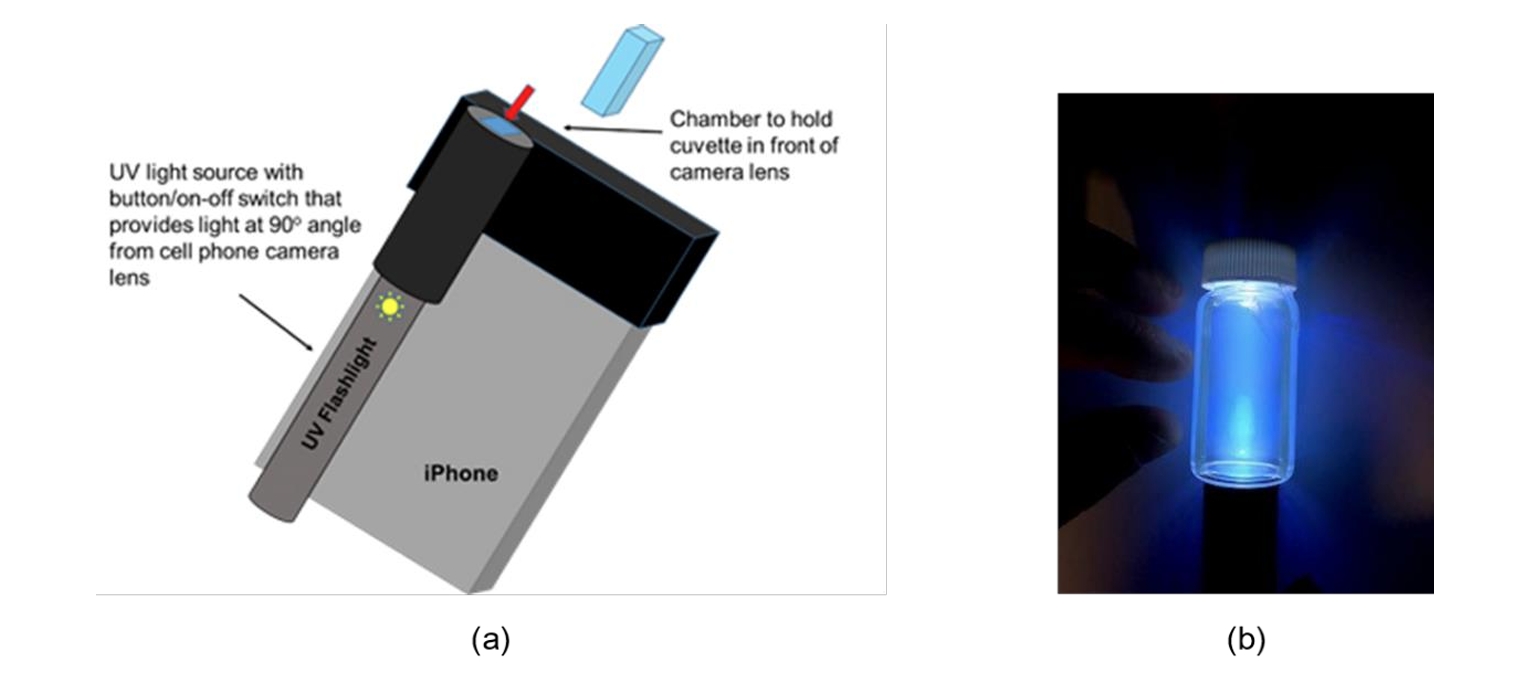

Assessing Environmental Oil Spill Based on Fluorescence Images of Water Samples and Deep Learning

Journal of Environmental Informatics, 2023

We developed a portable, plug-and-play device and a deep learning model that estimate oil concentration in water from fluorescence images captured with an iPhone. Trained on approximately 1,300 solvent-extracted samples, the model combines a CNN with a histogram bottleneck block and an attention mechanism to extract spectral features from low-contrast images. The system achieves high accuracy and includes a confidence estimator, offering oil spill responders a practical, near-real-time solution.

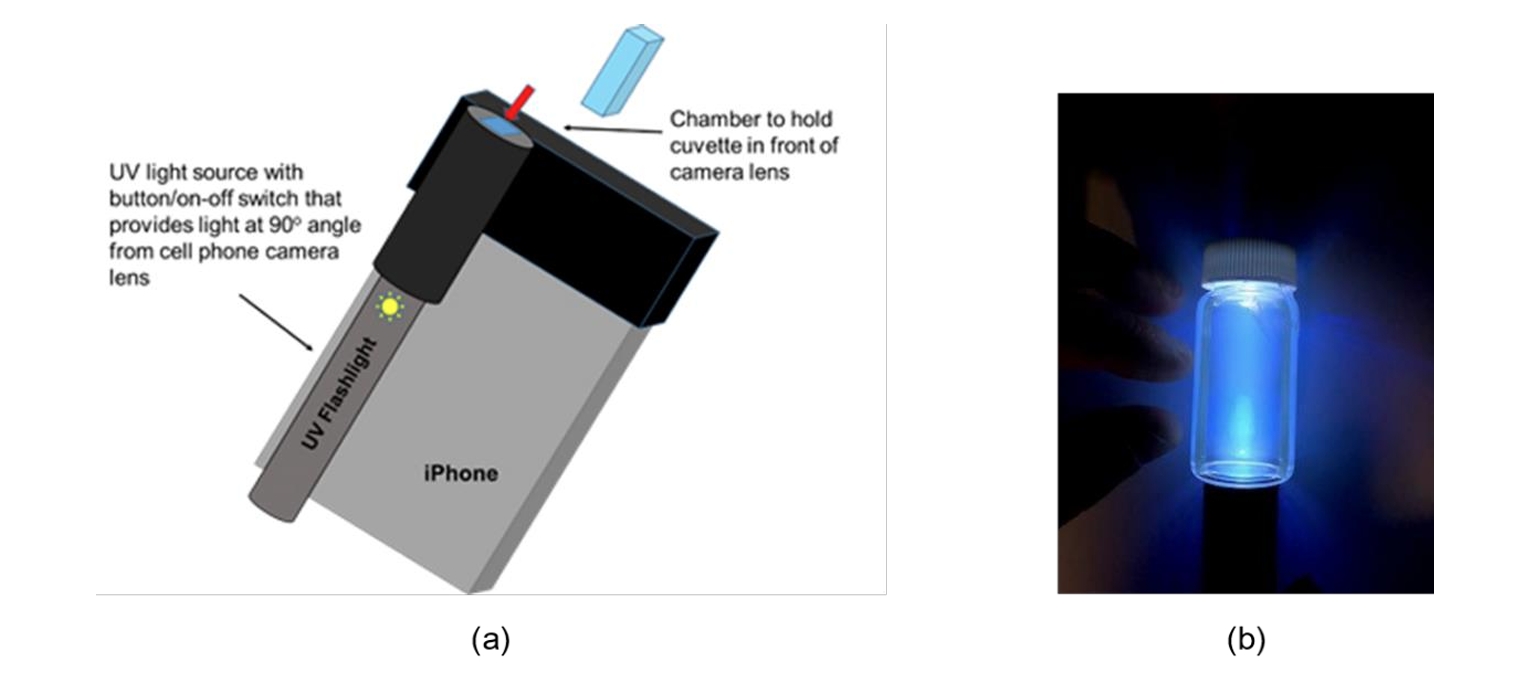

Real-Time Oil Spill Concentration Assessment Through Fluorescence Imaging and Deep Learning

Under Review, 2025

We present a real-time oil spill assessment system that integrates fluorescence imaging, deep learning, a mobile app (Oilix), and a data management platform to overcome the limitations of traditional monitoring methods. Using a MobileNetV3-Large-based model trained on 1,530 curated fluorescence images of two oil types, our approach achieves high accuracy, with an R² of 0.9958 and an RMSE of 9.28. Oilix enables rapid, cost-effective, and scalable field assessments, strengthening environmental monitoring and emergency response capabilities.

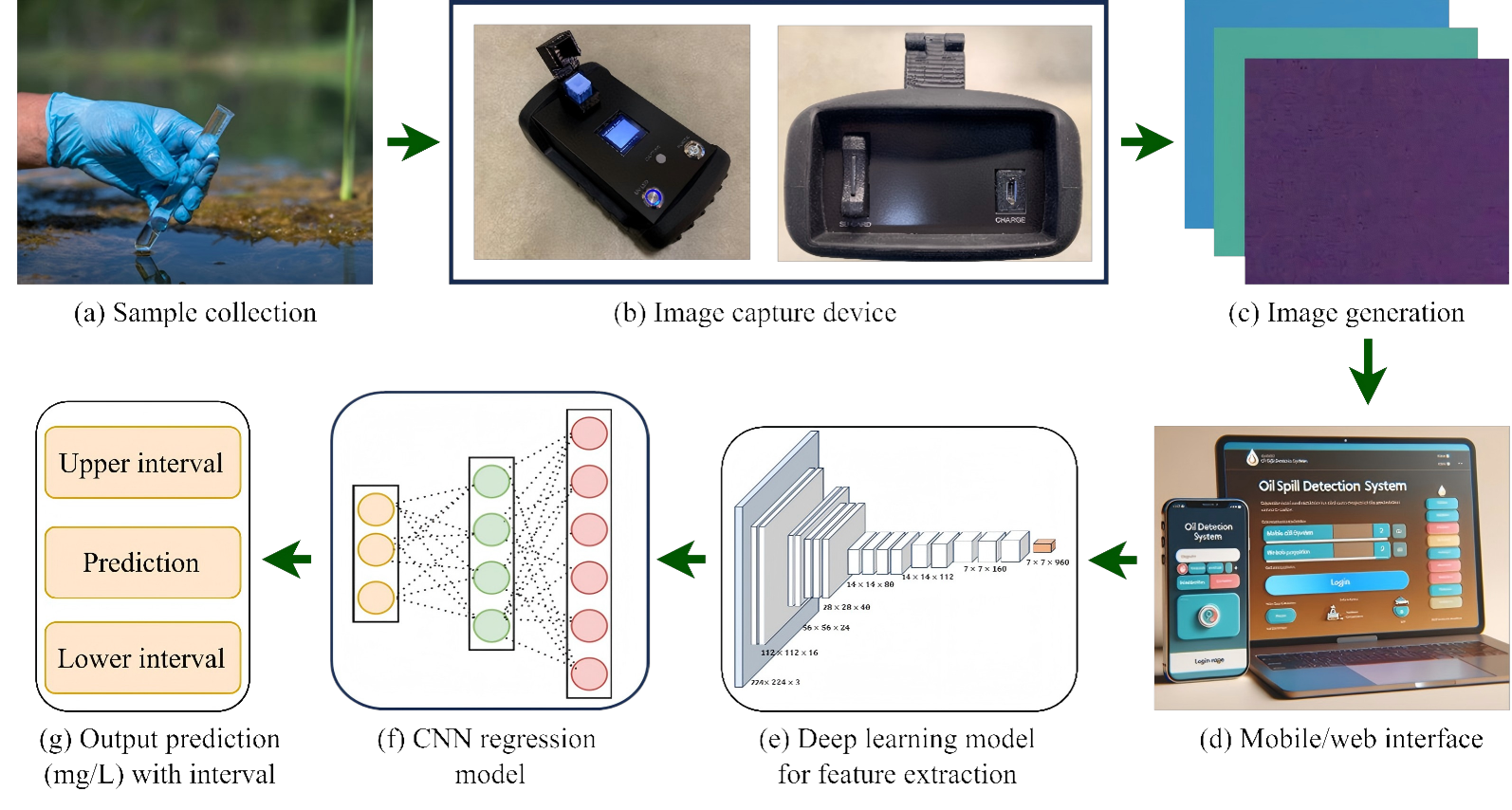

TOM: A Universal Tongue Optimization Model for Medical Tongue Image Segmentation

MIC Conference, 2024

In computerized intelligent tongue diagnosis, the tongue segmentation result usually serves as the input to downstream diagnostic tasks, so segmentation accuracy directly affects diagnostic results. This makes tongue segmentation a key step in computerized tongue diagnosis. In this paper, we propose TOM, a universal tongue optimization model for tongue segmentation. TOM achieves higher segmentation accuracy and faster inference than previous segmentation methods: even on a complex tongue image dataset with artificially added noise, it reaches 97.85% mIoU while reducing inference time by more than 25%. We also developed an online tongue segmentation tool based on TOM (http://www.itongue.cn) that lets doctors obtain tongue segmentations without writing code.

© 2025 Jiacheng Xie